This just in from American Cop Magazine: "... Video capabilities are of special concern to street cops and investigators. This is one area which can make or break your case in the first 24 hours and is one of the greatest investigative tools we have, but unless you can get it right away, it has less worth to the investigator. Don’t get me wrong; it’s still valuable to the case, as defense attorneys really hate to see their buck-toothed, mouth-breathing client’s smiling face as he shoves a cheap chrome revolver in the store clerk’s face and announces, “give me the money.” Street cops and detectives need to use that video evidence to make a quick arrest, get the cretin off the street and protect the public from the danger these people pose.

What if a street cop, investigator or crime scene investigator could run to the station or to their laptop in the car, plug a USB drive or CD into a department Windows PC based computer and evaluate video evidence to see if it’s viable for enhancement and even enhance the video right on the spot? Now, that’s what you’d call a real investigative tool. It would be instant gratification to be able to pull out a face obscured by shadows and say, “Hey, that’s little Aloysius Farnsworth MacGillacutty, that little slime-ball hangs out over on Rose and Spruce. Let’s go snatch him up and ruin his day.”

If your department has Amped Five Software available, you just might be able to do exactly that ..."

The word's getting out about forensic video analysis - even in the mainstream LE press. How cool is that?!

This blog is no longer active and is maintained for archival purposes. It served as a resource and platform for sharing insights into forensic multimedia and digital forensics. Whilst the content remains accessible for historical reference, please note that methods, tools, and perspectives may have evolved since publication. For my current thoughts, writings, and projects, visit AutSide.Substack.com. Thank you for visiting and exploring this archive.

Wednesday, August 29, 2012

Tuesday, August 28, 2012

Video and Image Analysis Tool Kit

Evidence Technology Magazine has many of the popular FVA tools featured in their current edition. Check it out by clicking here.

Monday, August 27, 2012

eForensics mag debuts

There's a new publication on the market - eForensics Magazine. Our friend Martino from Amped Software has a featured article in the debut issue.

An Introduction to Image and Video Forensics

"Surveillance cameras, photo enabled cell phones, and fully featured digital cameras are present almost everywhere in our lives. Martino Jerian presents an introduction to image and video forensics."

Check it out.

Friday, August 24, 2012

Channel Mixer in Amped Five

This just in from AmpedSoftware: "Another new feature has just been added to Amped Five from a suggestion by our friend George Reis, Forensic Image Analyst at Imaging Forensics and author of Photoshop CS3 for Forensics Professionals. The new filter is called Channel Mixer, and it is something familiar to many long time users of commercial photo editing software ..."

To read more about it, click here.

Thursday, August 23, 2012

AmpedSoftware on Twitter

To get the latest info on updates and new features, check out AmpedSoftware on Twitter: @AmpedSoftware.

Wednesday, August 22, 2012

Contrast Stretching

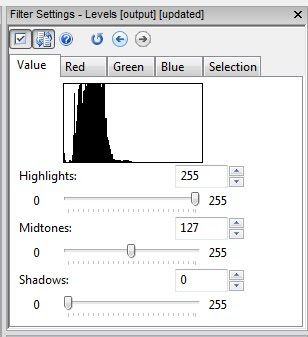

Contrast stretching (sometimes called normalization) is a simple image enhancement technique that attempts to improve the contrast in an image by expanding the range of intensity values it contains.

When you first examine your image, what do you see? Here, the image displayed is very dark. Notice that the values in the histogram sit well to the left of centre. Ideally, we'd rather the values form a nice, bell-shaped curve centred in the middle. To correct this, we'd like to take what's there (0-90) and stretch it across the 0-255 range.

After Contrast Stretching, the values are now spread across the 0-255 range - resulting in a lighter image / video.
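For those who like to see the arithmetic, here's a minimal sketch of a linear contrast stretch in plain Python. It simply maps the darkest value found to 0 and the brightest to 255. The function name and the sample values are mine, for illustration only; this is not how any particular product implements the filter.

```python
def contrast_stretch(pixels, out_min=0, out_max=255):
    """Linearly remap values so the darkest becomes out_min and the brightest out_max."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return list(pixels)  # flat image: there is no range to stretch
    span = out_max - out_min
    return [round(out_min + (p - lo) * span / (hi - lo)) for p in pixels]


# A hypothetical dark image whose values only occupy 0-90
dark = [0, 18, 36, 54, 90]
stretched = contrast_stretch(dark)
print(stretched)  # [0, 51, 102, 153, 255]
```

Note that the stretch only spreads out the values that are already there. It doesn't create new information.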

Getting there is different in Photoshop vs. AmpedFIVE.

In AmpedFIVE, the process is controlled by the user. The mode, Intensity or Color, is user selectable. The Sensitivity adjustment is like a nudge - how much pixel data to exclude. These two controls taken together allow for more refined control over the process.

In Photoshop, the closest thing to contrast stretching is the Equalize command (Image>Adjustments>Equalize). With Equalize, the darkest value gets mapped to 0 and the lightest value gets mapped to 255. Photoshop then remaps the middle values so that they are evenly redistributed.
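To see how an even redistribution differs from a straight linear stretch, here's a rough sketch of textbook histogram equalization in plain Python. I'm assuming 8-bit grayscale values, and I'm not claiming this is Photoshop's actual implementation; it's the generic cumulative-histogram approach.

```python
def equalize(pixels, levels=256):
    """Textbook histogram equalization for integer values in 0..levels-1."""
    # Count how many pixels fall on each level
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Running total of the histogram (cumulative distribution)
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)

    cdf_min = next(c for c in cdf if c > 0)  # cumulative count at the darkest value present
    n = len(pixels)
    if n == cdf_min:
        return list(pixels)  # only one distinct value: nothing to redistribute

    # Darkest value maps to 0, brightest to levels-1, the rest by cumulative rank
    return [round((cdf[p] - cdf_min) * (levels - 1) / (n - cdf_min)) for p in pixels]


sample = [10, 20, 30, 40]  # hypothetical values bunched at the dark end
equalized = equalize(sample)
print(equalized)  # [0, 85, 170, 255]
```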

Try it in PS. A couple of things will become evident. No SmartObjects. Not editable. Try it in AmpedFIVE. It's editable. And ... because changes aren't written to the pixels until you write the file out, it can be done in a non-destructive way.

Tuesday, August 21, 2012

Processing images and video on really old hardware

One of the things that I test, when looking at using a new piece of software, is how it works across a variety of PCs. I have both desktops and laptops running XP, Vista, and Win7 (and of course Macs). Given that there are many readers who are in government service, and use really old hardware, I want to make sure that my recommendations will be useful to a majority of my readers.

With that in mind, I've noted the progress that Adobe has made in squeezing every last bit of processing power out of the latest processors and GPUs. The installation space needed to install Photoshop, or the Suite, gets bigger with each release. A lot of the feedback that I've received is that people are stuck somewhere between CS2 and CS4 due to the limitations of their PCs.

What to do?

While Adobe's target Photoshop user is the Professional or Prosumer photographer, the assumption is that their customers will cycle their hardware as new software comes along. For the most part - they're right. But, that premise doesn't hold true with government service. The 18 month hardware replacement cycle often gets stretched to five years and beyond.

While Adobe's target is wide, the folks out there building purpose-specific software are targeting a very narrow audience. As an example, AmpedFIVE's code has been highly optimized to run as fast as possible on standard architecture (the low end), through the use of efficient implementation of advanced algorithms. It runs just as well on an old XP desktop, or an old XP laptop, as it does on a new Win7 workstation.

In a time of shrinking budgets, Amped gets its customers' concerns. Not having to replace a room full of computers in order to get the latest and greatest piece of software is a huge deal in government service. It's part of the reason that AmpedFIVE is my new best friend.

Monday, August 20, 2012

What is an unmanipulated image?

Here's an interesting link to a discussion on image "manipulation." The conversation reinforces what I've always said about the use of clarification techniques - your work should maintain the integrity of the images' content and context.

Thanks go out to Martino for the link.

Enjoy.

Friday, August 17, 2012

Image Processing by Point Operations

At their essence, point operations constitute a simple but important class of image processing operations and serve as a good starting point for discussion. These operations change the luminance values of an image and therefore modify how an image appears when displayed (think brightness adjustments). The terminology originates from the fact that point operations take single pixels as inputs. This can be expressed as

g(i,j)=T[f(i,j)]

where T is a grayscale transformation that specifies the mapping between the input image f and the result g, and i,j denotes the row, column index of the pixel. Point operations are a one-to-one mapping between the original (input) and modified (output) images according to some function defining the transformation T.

OK. So what does that have to do with us? Point operations can be used to enhance an image. Details not clearly visible in the original image may become visible upon the application of a point operator. For example, a dark region in an image may become brighter after the operation.
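As a quick illustration of g(i,j)=T[f(i,j)], here's a minimal sketch in plain Python. The image is a small list of rows, and T is a simple brightness offset that I made up for the example (the names `apply_point_op` and `brighten` are hypothetical). Because T only ever sees one pixel value at a time, it can be precomputed as a 256-entry lookup table.

```python
def apply_point_op(image, transform):
    """Apply g(i,j) = T[f(i,j)] to every pixel of an 8-bit grayscale image."""
    # T depends only on the pixel value, so build a lookup table once
    lut = [transform(v) for v in range(256)]
    return [[lut[v] for v in row] for row in image]


def brighten(value, offset=40):
    """Example T: add a fixed offset, clipping at white (255)."""
    return min(255, value + offset)


f = [[10, 20],
     [200, 250]]
g = apply_point_op(f, brighten)
print(g)  # [[50, 60], [240, 255]]
```

The dark pixels in the top row come up, while the value already near white clips at 255.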

Thursday, August 16, 2012

Kinesense Player Manager - a closer look

Yesterday, I introduced you to the Kinesense Player Manager. Today, I want to show you a few of the highlights of this cool new program.

Visual people will love the fact that you can see a snapshot of what the player looks like. Player Manager's left most tab/panel presents you with a list of the players installed or present on your system. You can search for others on your system or discs, and you can import new ones through the interface. It'll tell you where it is on your system ... and ... what file types are associated with the player.

When you get a disc and you're not sure about what player will be needed for the files on the disc, simply search for the player based on the file type. Here, I've searched for .60d ... and it correctly returned the QuickWave player. Remember, this is on your computer ... it's searching for players on your computer ... not over the internet.

And ... how cool is this ... you no longer have to be embarrassed to say that you use GSpot. Player Manager will give you codec information and potential sources to find the codec installer on the web. Just point it at a file and it does the work for you.

Kinesense Player Manager is a cool new product that's worth a look. I think you'll be impressed.

Wednesday, August 15, 2012

Kinesense Player Manager

Even though the company is headquartered on the wrong side of the Irish Sea (Scots Wha Hae), the folks at Kinesense have come out with an interesting new product, the Kinesense Player Manager.

According to Kinesense, "PlayerManager comes preloaded with a database with information on 100s of players and file formats but also allows you to bulk import your own collection of players, add and search tags and information specific to your force and region, and export that library to synchronize multiple PCs. PlayerManager also comes with a complete copy of the Kinesense Vid-ID database to help identify file formats when you don’t have an internet connection."

For centrally managed police IT departments, this is a huge (and welcome) addition to our toolbox. Imagine being able to push players to all investigators' desktops as new ones come up. This could also work well at offices of District / State Attorneys and Public Defenders - or private FVA labs.

It's nice to see people thinking of ways to make our lives easier. If you're not familiar with Kinesense, you should check them out soon.

Tuesday, August 14, 2012

Image enhancement

Years ago, I put a post up noting definitions from McAndrew's book on image processing. I still get the odd question about specifics within those generic definitions. So here's a little deeper look at enhancement operations.

Anil K. Jain (Fundamentals of Digital Image Processing, 1989) defined image enhancement as "accentuation, or sharpening, of image features such as edges, boundaries, or contrast to make a graphic display more useful for display or analysis." Jain goes on to note that the enhancement process doesn't increase the inherent information content in the data. It's like I've always said, we don't change content or context - we just help the trier of fact see and correctly interpret what's there in the image (or video).

One of the issues where we tend to have problems is quantifying the criterion for enhancement. As such, a large number of enhancement techniques are empirical and require interactive procedures in order to obtain satisfactory results. In essence, enhancement is hands-on and participatory. Analysts must make judgements as to what to do, in what order, and at what strength.

With this in mind, I want to spend some time exploring enhancement operations. I'll start today with a bit of a road map.

Enhancement's general operations:

- Point Operations

- Spatial Operations

- Transform Operations

- Pseudocolouring

Point operations include:

- Contrast Stretching

- Noise Clipping

- Window Slicing

- Histogram Modeling

Spatial Operations include:

- Noise Smoothing

- Median Filtering

- Unsharp Masking

- Low-pass, High-pass, and Bandpass filtering

- Zooming

Transform Operations:

- Linear Filtering

- Root Filtering

- Homomorphic filtering

Pseudocolouring:

- False Colouring

- Pseudocolouring

With these categories and operations in mind, I'll spend some time explaining them and putting them in context in future posts.

Monday, August 13, 2012

NCMF News

I received this in my in-box, so I thought that I'd share. Dr. Grigoras and his staff are doing wonderful work in Denver.

Hello from the National Center for Media Forensics,

As the summer is winding down, we are getting ready for our next incoming cohort of graduate students. We are very excited about this endeavor because it is also the launch of the program in a Hybrid delivery format complimenting online learning with intensive hands-on sessions. If you are interested in becoming a graduate student in the two-year Media Forensics program at the NCMF, please remember that we accept applications for Fall enrollment only. The Fall 2013 MSRA-MF application deadline is February 15, 2013. Keep in mind that with the new Hybrid format, you can attend from anywhere in the world!

Thankfully, MATLAB student pricing is entirely more reasonable than the retail rate. Feel free to use your MATLAB beyond FVA ... exploring new LEGO Mindstorms opportunities.

Friday, August 10, 2012

Quantization

Folks often refer to digital video as "much better than the old tapes." Somehow, the fact that we've progressed to digital video recorders has translated into people's minds as "better" and "an improvement." But is it?

More often than not, the DVRs that we see at crime scenes are the sub-500 dollar models from the local big box store. The purchasers are also the installers, choosing not to change the default settings. These default settings are usually CIF resolution and very lossy compression. But that's only part of the story.

The simplest way to explain where the problems begin is to look at the point where the analogue camera signal meets the digital video recorder. If the camera has a type of CVBS connector (on consumer products a yellow RCA connector is typically used for composite video), it's analogue. If it has a network type plug (twisted pair Cat5, Cat5e, or Cat6 cables using 8P8C (RJ-45) modular connectors with T568A or T568B wiring), it's digital.

Quantization, in digital signal processing, is the process of mapping a large set of input values to a smaller set. A device or algorithmic function that performs quantization is called a quantizer. The error introduced by quantization is referred to as quantization error or round-off error. Oops ... error and round-off both imply loss. Loss isn't good. I thought digital was better?!
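Here's a minimal sketch of a uniform quantizer in plain Python, to make the round-off idea concrete. The step size and sample values are made up for the example, and real DVR hardware will do this differently; the point is only that each input snaps to the nearest allowed level and the difference is the quantization error.

```python
import math


def quantize(value, step):
    """Uniform quantizer: snap value to the nearest multiple of step."""
    return step * math.floor(value / step + 0.5)


# Hypothetical analogue samples on a 0.0-1.0 scale, quantized in steps of 0.25
samples = [0.12, 0.49, 0.77, 0.93]
step = 0.25
quantized = [quantize(s, step) for s in samples]
errors = [s - q for s, q in zip(samples, quantized)]

print(quantized)  # [0.0, 0.5, 0.75, 1.0]
```

With this kind of quantizer the error for any one sample is never more than half a step, so a finer step means less loss.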

Look at the graph. In rounding-off, sometimes the grid is above the wave, sometimes it's below. The accuracy depends on the algorithm's parameters. What does this mean in practical terms?

Let's say you bought an expensive dome camera that sends 570TVL (television lines of resolution) of signal down the cable. TVL is also known as horizontal lines of resolution. But, an NTSC analog video picture is composed of 480 active horizontal lines. What gives?! Apples and oranges?

Here's the word from Bosch:

"Digitized NTSC video at CIF resolution is an image that is 352x240. It intentionally matches the NTSC 240 horizontal lines in a field so that there is a one-to-one conversion from analog to digital CIF. However, the 352 only produces a theoretical 264 TVL (remember 75 percent of 352), which does not match the 330, 380, 480 ,or 540 TVL from the cameras – so what happens to the extra vertical lines of resolution? They are lost. At CIF, any camera capable of delivering over 264 TVL will not appear superior: 264 TVL and 1,000 TVL cameras will produce identical images.

When compression solutions use CIF resolution, they typically use every other field and simply discard the others – so a CIF system throws away half the video information from the camera. Because the eye is good at averaging things, it’s not that noticeable, but this cuts the vertical resolution in half, and makes the resulting video “jerky” and less smooth."

Throws away half the video? Keeps every other line? Wow! Remember, CIF is often the default setting in consumer grade DVRs. So, we add round-off error to throwing away every other line? Oops. (Here's a handy resolution chart. Do the math. Divide the source resolutions by CIF. If you get a remainder, there's loss)
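The Bosch numbers can be checked with a few lines of plain Python. This sketch assumes the 75 percent rule of thumb from the quote; the function names are mine. It also shows what discarding one field does to a 480-line frame.

```python
CIF_WIDTH = 352  # horizontal pixels at CIF (NTSC), per the Bosch quote


def tvl_limit(horizontal_pixels, factor=0.75):
    """Theoretical TVL a digitized image can carry (75 percent of its width)."""
    return horizontal_pixels * factor


def effective_tvl(camera_tvl, horizontal_pixels):
    """What survives: the lower of what the camera sends and what the grid holds."""
    return min(camera_tvl, tvl_limit(horizontal_pixels))


def drop_field(frame_lines):
    """Keep every other line, i.e. one field of an interlaced frame."""
    return frame_lines[::2]


for camera in (330, 480, 570, 1000):
    print(camera, "TVL camera ->", effective_tvl(camera, CIF_WIDTH), "TVL at CIF")

frame = list(range(480))      # 480 active lines in an NTSC frame
print(len(drop_field(frame)))  # 240
```

Every camera above 264 TVL ends up at the same 264, which is the quote's point about the 264 TVL and 1,000 TVL cameras producing identical images.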

At its essence, quantization is part of the process of getting the signal into the DVR and stored/displayed. It's one step in the process - but an important step. How it's done dictates how much or how little loss occurs.

Thursday, August 9, 2012

Critical Fusion Frequency

Take a light and switch it on and off. When the switching is slow, the individual flashes are distinguishable. Speed the flashes up, and it becomes harder to distinguish the individual flashes from each other.

The graph below, taken from an old text book, shows the typical vision system's response.

As the frequency increases, our sensitivity (or ability to distinguish between individual flickers) decreases. At the critical fusion frequency (CFF), the flashes are indistinguishable from a steady light of the same average intensity. Interestingly, the CFF is different for each of us, as demonstrated here for patients with diseases of the eye. (a side note: do autistics have a higher CFF? This might explain why fluorescent lights bother autistic people so much ... No, Sarge, I'd rather not turn on the lights in here).

This same phenomenon also explains why we can generally see the stutter in video when the frame rate drops below 20 frames per second. Frames per second can thus be seen as the frequency of video, yes? Is it a coincidence that interlaced video is 60 fields per second (NTSC) and power line frequency is 60Hz? PAL is 50 fields in a country with a power line frequency of 50Hz? Hmmm.

Wednesday, August 8, 2012

Mach band effect

You've heard of the CSI effect. It tends to cause problems in getting folks back to reality. Part of the problem is that folks believe what they see, both on TV and in front of them as they sit in the jury box. But is seeing really believing?

What we're seeing here is the Mach band effect - where apparent brightness is not uniform (transitions seem brighter on the bright side and darker on the dark side). This effect was first described by Ernst Mach, the famous supersonic physicist that gave us the Mach numbering system (M=V/a). The undershoots and overshoots (red line) illustrate the effect.

Remember that this is a perceived effect. The change in luminance is a hard step. The change in brightness has the under/over shoots - giving the appearance of a gradient. Remember, brightness and luminance aren't the same thing.
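If you'd like to play with the idea, here's a rough sketch in plain Python. It takes a hard luminance step and runs it through a very crude stand-in for the eye's response: each point is pushed away from the average of its neighbours. That model, the function names, and the numbers are all my assumptions for illustration, not a vision-science result, but it does reproduce the undershoot and overshoot on either side of the edge.

```python
def box_blur(signal, radius):
    """Average each sample with its neighbours, repeating the end values at the borders."""
    n = len(signal)
    blurred = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred


def perceived_brightness(luminance, radius=5, strength=0.5):
    """Toy model: brightness = luminance pushed away from the local average."""
    blurred = box_blur(luminance, radius)
    return [l + strength * (l - b) for l, b in zip(luminance, blurred)]


# Luminance is a hard step: 20 dark samples, then 20 bright samples
step = [50] * 20 + [200] * 20
brightness = perceived_brightness(step)

print(min(brightness) < 50)   # True: undershoot on the dark side of the edge
print(max(brightness) > 200)  # True: overshoot on the bright side of the edge
```

Away from the edge, the modelled brightness matches the luminance exactly. Only the transition is exaggerated.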

Want to see more? Click here to head over to Duke University and see for yourself.

Tuesday, August 7, 2012

Simultaneous contrast

You've seen this exercise before. Are the centre objects the same? Use your eyedropper and sample a pixel. What values for RGB do you find? Are they the same?

What's going on that tricks you into thinking that they are different? If they're the same RGB values, why do they look different?

The way our eyes and brains interact, the luminance of an object is independent of the luminances of the surrounding objects. But, the brightness of an object is the perceived luminance and depends on the luminances of the surrounding objects. Thus, two objects with different surroundings could have identical luminances but different brightnesses. The bottom grouping has a light background and makes the centre look darker than the centre of the top - brightness depends on the luminance of the surrounding object.
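The eyedropper test can be mimicked in a few lines of plain Python. This sketch builds two small grayscale patches with different backgrounds and the same centre value (the sizes and values are hypothetical), then samples the centre pixel of each.

```python
def make_patch(background, centre, size=9, inner=3):
    """Build a size x size grayscale patch with an inner x inner square in the middle."""
    start = (size - inner) // 2
    end = start + inner
    return [[centre if start <= r < end and start <= c < end else background
             for c in range(size)]
            for r in range(size)]


top = make_patch(background=40, centre=128)      # dark surround
bottom = make_patch(background=220, centre=128)  # light surround

mid = 9 // 2  # row/column index of the centre pixel
print(top[mid][mid], bottom[mid][mid])  # 128 128
```

The sampled values are identical. Any difference you see on screen between the two centres comes from the surround, not from the pixels.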

For more information on simultaneous contrast, click here.

Monday, August 6, 2012

Scotopic Vision

We were watching the History Channel the other day and one of my kids asked why all the night shots were green. Kids ask the craziest things.

The long/thin photoreceptors in the eyes, the rods, are responsible for vision under low light conditions. They provide Scotopic vision - low light vision. The term comes from Greek skotos meaning darkness and -opia meaning a condition of sight.

As it turns out, the rods are most sensitive to wavelengths of light around 498 nm (green-blue). In the graph above, the green bell-shaped curve represents scotopic vision (the rods) and the black represents photopic vision (the cones). The area where the two intersect - where both rods and cones are active - represents mesopic vision.

So, understanding how our eyes work in low light, the creators of night vision technologies peg the displays between 450 and 550 nm - green.

With this answer, my kids responded ... cool.

For more information on the spectral response of the human visual system under scotopic conditions, click here.

Friday, August 3, 2012

Version 2.0 of the blog starts now

I've decided to move the blog away from a single piece of software and change the title of the blog. With this change of focus, I'll be posting more imaging science posts, authentication tips, upcoming training offerings, and legal updates.

You can see this new focus in action at the LEVA conference as I present Image Processing Fundamentals.

Here we go. It's going to be fun. ...

Thursday, August 2, 2012

Image Processing Fundamentals

Here's a little blurb on what I'll be presenting at this year's LEVA Conference in San Diego:

Image Processing Fundamentals

Our juries are becoming more sophisticated. They're demanding deeper responses and better explanations to questions about your clarification techniques. Part of your testimony (telling your story) now involves framing the science of your analysis in a way that your jury can understand. Who are Joseph Fourier and Carl Friedrich Gauss? What do they have to do with your work as an analyst?

In this session, we move beyond workflows and checklists and enlist the help of some of the legends of mathematics to focus on the science that lies beneath the surface of the filters that we use every day.

I hope to see you all there.

Wednesday, August 1, 2012

DVD copy as best evidence

This just in from the UK's David Thorne: "... When we examined the individual archive files we found that whilst the player was displaying the pink screen it had not displayed the last few frames from the end of the archive. It was also discovered that there was a gap between the actual last frame of the archive and the start of the next archive. These gaps differed in length between each archive from 0.4 and 41.40 seconds which suggested that the system was set to record using motion detection and once triggered it then recorded for a set time and then stopped not starting again until motion was detected.

This meant that the chronology of events was distorted dependant on the delay between recordings. And if that wasn’t enough we found that the compression format used for converting of the screen capture to the DVD format had not converted all of the unique frames thus meaning that even more of the original frames were missing from the DVD. The DVD format also changes the detail in the images but I’ll save that for another time.

There is no suggestion that this was done deliberately to hide material but it was certainly misleading and could have, if allowed to go to court, been damaging to the case ..."
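
The kind of gap check described in the quote can be sketched in a few lines of Python. This is only an illustration, not the tool or method used in that case. It assumes you have already recovered a start and end time (in seconds) for each archive file; the recording_gaps name and the sample times are hypothetical, with the times picked so the gaps match the 0.4 and 41.40 second extremes mentioned above.

```python
def recording_gaps(archives):
    """Return the gap, in seconds, between the end of each archive and the
    start of the next. `archives` is a list of (start, end) pairs sorted by
    start time."""
    gaps = []
    for (_, prev_end), (next_start, _) in zip(archives, archives[1:]):
        gaps.append(round(next_start - prev_end, 2))
    return gaps

# Hypothetical archive times in seconds from the first recorded frame.
archives = [(0.0, 30.0), (30.4, 60.4), (101.8, 131.8)]

gaps = recording_gaps(archives)
recorded = sum(end - start for start, end in archives)
elapsed = archives[-1][1] - archives[0][0]

print("gaps between archives (s):", gaps)
print("seconds recorded:", round(recorded, 2))
print("seconds elapsed: ", round(elapsed, 2))
```

If the archives are played back to back, the viewer sees only the recorded seconds, not the elapsed ones. That difference is the distortion in chronology the quote is warning about.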
