The recent update to Amped SRL's flagship tool, FIVE, brought some UX headaches for many US-based users. You see, the redesigned reporting function does something new and unexpected. Let's take a look, and offer a couple of work-arounds.

Let's say you're used to your Evidence.com work flow, the one where all your evidence goes into a single folder for upload, and you're processing files for a multi-defendant case. If there are files featuring only one of the defendants, which happens often, you'll want to have separate project (.afp) files for each evidence item. This will make tagging easier. This will make discovery easier. This will make the new reporting functionality become non-functional.

You see, the new reporting feature doesn't just create a report, it creates a folder to hold the report and the Amped SRL logos - and calls the folder "report." That's fine for the first file that you process. But the next project? Well, when you go to generate the report, FIVE will see that there's a "report" folder there already. What does it do? Does it prompt you to say, "hey! there's already a folder with that name. What do you want to do?" Of course not. Not expecting the new reporting behavior, and with no documentation of the new reporting format to warn you, you'll just keep processing away. At the end of your work, there's only one folder and only one report file.

The work-around on your desktop is to put each evidence item into its own folder, within the case folder. It's an extra step, I know. You'll also have to modify the "inbox" that E.com is looking for.
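If you'd rather not do that extra step by hand, here's a minimal sketch of it in Python. The function name, the flat case-folder layout, and the "_item" naming for the new subfolders are all my assumptions for illustration; nothing here comes from FIVE or Evidence.com. Try it on working copies, never on original evidence.

```python
from pathlib import Path
import shutil


def isolate_evidence_items(case_folder):
    """Move each file in case_folder into its own subfolder.

    Hypothetical helper: "cam01.avi" ends up at
    "<case_folder>/cam01_item/cam01.avi", so a report folder generated
    next to one item cannot collide with the report for another.
    Returns the list of new file paths.
    """
    case_path = Path(case_folder)
    moved = []
    # sorted() snapshots the listing, so folders created below are not revisited.
    for item in sorted(case_path.iterdir()):
        if not item.is_file():
            continue  # leave existing subfolders alone
        target_dir = case_path / f"{item.stem}_item"
        target_dir.mkdir(exist_ok=True)
        destination = target_dir / item.name
        if destination.exists():
            # Never overwrite silently; stop and let the analyst decide.
            raise FileExistsError(f"refusing to overwrite {destination}")
        shutil.move(str(item), str(destination))
        moved.append(destination)
    return moved
```

Files that share a name stem (say, a video and its .afp project) land in the same subfolder, which is probably what you want. Any upload "inbox" setting would still need to be pointed at the new locations.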

The other weird issue is that FIVE now drops some logos and a banner as loose files in the report folder. I'm sure that this is due to FIVE's processing of the report - first to HTML - then to PDF via some freeware. One would think that in choosing PDF you wouldn't receive the side-car files, but you would be thinking wrong. Again, this has to do with the way the bit of freeware processes the report.

As an interesting aside, in a Daubert hearing, I actually got a cross examination question that hinted at Amped SRL being pirates of software. Is anything in the product an original creation or is it just pieced together bits and such? But, I digress.

Remember, in the US, anything created in the process of analysis should be preserved and disclosed. One customer complained that it seems as though Amped SRL is throwing an extra business card in on the case file. I don't know about that. But, it does seem a bit odd for a forensic science tool to behave in such a way.

You can always revert to the previous version if you want to save time and preserve your sanity. This new version doesn't add much for the analyst anyway. You can easily install previous versions. It takes only a few minutes each time.

As with anything forensic science, always validate new releases of your favorite tools prior to deploying them on casework. If you're looking for an independent set of eyes, and would like to outsource your tool validation, we're here to help.

Have a great new year my friends.

This blog is no longer active and is maintained for archival purposes. It served as a resource and platform for sharing insights into forensic multimedia and digital forensics. Whilst the content remains accessible for historical reference, please note that methods, tools, and perspectives may have evolved since publication. For my current thoughts, writings, and projects, visit AutSide.Substack.com. Thank you for visiting and exploring this archive.

Monday, December 30, 2019

Sunday, December 22, 2019

Human Anatomy & Physiology

In my private practice, I often review the case work of others. This "technical review" or validation is provided for those labs that either require an outside set of eyes by policy / statute, or for those small labs and sole-proprietors who will occasionally require these services.

Recently, I was reviewing a case package related to a photographic comparison of "known" and "unknown" facial images. In reviewing the report, it was clear that the examiner had no training or education in basic human anatomy & physiology. Their terminology was generic and confusing, avoiding specific names for specific features. It made it really hard to follow their train of thought, which made it hard to understand the basis for their conclusion.

Many in police service either lack a college degree, or have a degree in criminal justice / administration of justice. These situations mean that they won't have had a specific, college level anatomy / physiology course. Or, it may be that, like me, your anatomy course was so long ago that you find yourself needing a refresher.

I got my refresher via a certificate program from the University of Michigan, on-line. But, if you don't have the time or patience to work through the weekly lessons, or if you're looking for a lower cost option, I've found an interesting alternative.

In essence, I've found a test-prep course that's designed to help doctors and medical professionals learn what they're supposed to know, and help them pass their tests. It's reasonably priced and gives you the whole program on your chosen platform.

The Human Anatomy & Physiology Course by Dr. James Ross (click here) even comes with illustrations that you could incorporate in your reports. Check it out for yourself.

You don't necessarily have to have training / education in anatomy to opine on a photographic comparison. Not all jurisdictions will care. But, it really ups your reporting game if you know what the different pieces and parts are called, and if you use professional looking graphics in your report.

If you need help or advice, please feel free to ask. I'm here to help.

Have a great day my friends.

Monday, December 16, 2019

Independence Matters

Independence matters. When learning new materials, it's important to get an unvarnished view of the information. Too many "official" vendor-sponsored training sessions aren't at all training to competency - they're information sessions, at best, or marketing sessions, at worst.

Vendors generally don't want their employees and contractors to point out the problems with their tools. But as a practitioner, you'll want to know the limitations of that thing you're learning. You'll want to know what it can do, what it does well, and what it doesn't do - or does wrong.

Now, I understand that when people start showing you their resume, they've generally lost the argument. But, in this case, it's an important point of clarification. I do so to show you the value proposition of independent training providers like me. Having been trained and certified by California's POST for curriculum development and instruction means that I understand the law enforcement context. Yes, I was a practitioner who happened to be in police service. The training by POST takes that practitioner information and focuses it, refines it, towards creating and delivering valid learning events. Having an MEdID - a Masters of Education in Instructional Design, means that I've proven that I know how to design instructional programs that will achieve their instructional goals. The PhD in Education is just the icing on the cake, but proves that I know how to conduct research and report my results - an important skillset for evaluating the work of others.

Unlike many of the courses on offer in this space, the courses that I've designed and deliver for the forensic sciences are "competency based." I'm a member of the International Association for Continuing Education and Training (IACET) and follow their internationally recognized Standard for Competency Based Learning.

According to the IACET's research, the Competency-Based Learning (CBL) standard helps organizations to:

- Align learning with critical organizational imperatives.

- Allocate limited training dollars judiciously.

- Ensure learning sticks on the job.

- Provide learners with the tools needed to be agile and grow.

- Improve organizational outcomes, return on mission, and the bottom line.

This is why, when delivering "product training," I've always wrapped a complete curriculum around the product. I've never just taught FIVE, for example. I've introduced the discipline of forensic digital / multimedia analysis, using the platform (FIVE) to facilitate the work. This is what competency based learning looks like.

Yes, you need to know what buttons to push, and in what order, but you also need to know when it's not appropriate to use the tool and when, how, and why to report inconclusive results. You'll need to know how to facilitate a "technical review" and package your results for an independent "peer review." You'll also need to know that there may be a better tool for a specific job - something a manufacturer's representative won't often share.

Align learning with critical organizational imperatives - I've studied the 2009 NAS report. I understand its implications. The organization that the analyst serves is the Trier of Fact, not the organization that issues their pay. The courses that I've designed and deliver match not only with the US context, but also align with the standards produced in the UK, EU, India, Australia, and New Zealand (many other countries choose to align their national standards with one of these contexts).

Allocate limited training dollars judiciously - Whilst most training vendors continue to raise prices, I've dropped mine. I've even found ways to deliver the content on-line as micro learning, to get prices as low as possible. Plus, it helps your agency meet its sustainability goals.

Ensure learning sticks on the job - Building courses for all learning styles helps to ensure that you'll remember, and apply, your new knowledge and skills.

Provide learners with the tools needed to be agile and grow - this is another reason for building on-line micro learning offerings. Learning can happen when and where you're available, and where and when you need it.

Improve organizational outcomes, return on mission, and the bottom line - competency based training events help you improve your processing speed, which translates to more cases worked per day / week / month. This is your agency's ROM and ROI. Getting the work done right the first time is your ROM and ROI.

I'd love to see you in a class soon. See for yourself what independence means for learning events. You can come to us in Henderson, NV. We've got seats available for our upcoming Introduction to forensic multimedia analysis with Amped FIVE sessions (link). You can join our on-line offering of that course (link), saving a ton of money doing so. Or, we can come to you.

Have a great day, my friends.

keywords: audio forensics, video forensics, image forensics, audio analysis, video analysis, image analysis, forensic video, forensic audio, forensic image, digital forensics, forensic science, amped five, axon five, training, forensic audio analysis, forensic video analysis, forensic image analysis, amped software, amped software training, amped five training, axon five training, amped authenticate, amped authenticate training

Friday, December 13, 2019

Techno Security & Digital Forensics Conference - San Diego 2020

It seems that the Techno Security conference's addition of a San Diego date was enough of a success that they've announced a conference for 2020. This is good news. I've been to the original Myrtle Beach conference several times. All of the big players in the business set up booths to showcase their latest. For practitioners, it's a good place to network and see what's new in terms of tech and technique.

It's good to see the group growing. Myrtle Beach is a bit of a pain to get to. Adding dates in Texas and California helps folks in those states get access and avoid the crazy travel policies imposed by their respective states. Those crazy travel policies are at the heart of my moving my courses on-line.

The sponsor list is a who's-who of the digital forensics industry. It contains everyone you'd expect, plus one you wouldn't - LEVA. Why is LEVA, a 501(c)(3) non-profit, a Gold Sponsor? How is it that a non-profit public benefit corporation has about $10,000 to spend on sponsoring a commercial event?

As I write this, I am currently an Associate Member of LEVA. I'm an Associate Member because, in the view of LEVA's leadership, my retirement from police service disqualifies me from full membership. I've been a member of LEVA for as long as I've been aware of the organization, going back to the early 2000's. I've volunteered as an instructor at their conferences, believing in the mission of practitioners giving back to practitioners. But, this membership year (2019) will be my last. Clearly, LEVA is no longer a non-profit public benefit corporation. Their presence as a Gold Sponsor at this conference reveals their profiteering motive. They've officially announced themselves as a "training provider," as opposed to a charity that supports government service practitioners. Thus, I'm out. Let me explain my decision making on this in detail.

Consider what it will take if you choose to maintain the fiction that LEVA is a membership support / public benefit charity.

- Gold Sponsorship is $6300 for one event, or $6000 per event if the organization commits to multiple events. Let's just assume that they've come in at the single event rate.

- Rooms at the event location, a famous golf resort, are advertised at the early bird rate of $219 plus taxes and fees. Remember, the event is being held in California - one of the costliest places to hold such an event. Taxes in California are some of the highest in the country. Assuming only two LEVA representatives travel to work the event, and given the duration of the event, lodging will likely run about a thousand dollars per person.

- Add to that the "per diem" that will be paid to the workers.

- Add transportation to / from / at.

- Consider that, as a commercial entity, LEVA will likely have to give things away - food / drink / swag - to attract attention.

The grand total for all of this will exceed $10,000 if done on the cheap. What's the point of being a Gold Sponsor if one intends to do such a show on the cheap? I know that a two-person showing at a conference, whilst I was with Amped Software, ran between $15,000 and $25,000 per show. But, even at $10,000, what is the benefit to the public - the American taxpayer - by this non-profit public benefit organization's attendance at this commercial event? Hint: there is none.

If LEVA is a membership organization, then it would take an almost doubling of the membership - as a result of this one show - to break even on the event. We all know that's not going to happen.
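To make that arithmetic easy to re-run with your own numbers, here's a rough sketch. The function names are mine, and the only inputs taken from the post are the $6,300 sponsorship, about $1,000 of lodging per person, two representatives, and the $75 dues figure I mention further down. Per diem, transportation, and giveaways aren't itemized above, so they're left as a parameter and not guessed at.

```python
import math


def event_cost(sponsorship, lodging_per_person, staff, other_costs=0):
    """Total cost of working one show.

    other_costs covers per diem, transportation, and giveaways, which the
    post does not itemize; pass your own estimate.
    """
    return sponsorship + lodging_per_person * staff + other_costs


def members_to_break_even(total_cost, annual_dues):
    """Smallest number of new dues-paying members that covers total_cost."""
    return math.ceil(total_cost / annual_dues)


# Figures from the post: single-event Gold Sponsorship, roughly $1,000 of
# lodging per person, two representatives.
known = event_cost(sponsorship=6300, lodging_per_person=1000, staff=2)
print(known)  # 8300, before per diem, transportation, and giveaways

# ASSUMPTION: $75 is used as a stand-in for every membership class.
for total in (10_000, 15_000, 25_000):
    print(total, members_to_break_even(total, annual_dues=75))
```

At $75 per member, the $10,000 floor works out to 134 new members and the $25,000 upper figure to 334. How close that comes to a doubling depends on the actual membership count and dues mix.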

If you need further convincing, let's revisit their organizational description from the sponsor's page. This is the information that LEVA provided to the host. This is the information LEVA wants attendees at the conference to have about LEVA.

Ignoring the obvious misspelling (we all do it sometimes), this statement is in conflict with the LEVA web site's description of the organization:

"LEVA is a non-profit corporation committed to improving the quality of video training and promoting the use of state-of-the-art, effective equipment in the law …"

First, what is a "global standard?" If you're thinking of an international standard for continuing education and training, the relevant "standards body" might be the International Association for Continuing Education and Training (the IACET). LEVA's programs are not accredited by the IACET. How do I know this? As someone who designs and delivers Competency Based Training, I'm an IACET member. I logged in and checked. LEVA is not listed. You don't have to be a member to check my claim. You can visit their page and see for yourself. Do they at least follow the relevant ANSI / IACET standard for the creation, delivery, and maintenance of Competency Based Training programs? You be the judge.

Is LEVA the "only organization in the world that provides court-recorgnized training in video forensics that leads to certification"? Of course not. ANSI is a body that accredits certification programs. LEVA is not ANSI accredited. The International Certification Accreditation Council (ICAC) is another. ICAC follows the ISO/IEC 17024 Standards. LEVA is not accredited by ICAC either. Additionally, the presence of the IAI in the marketplace (providing both training events and a certification program) proves the lie of LEVA's marketing. LEVA is not the "only organization" providing training, it's one of many.

LEVA is not the only organization providing certification either. There's the previously mentioned IAI, as well as the ETA-i. Unlike the IAI and LEVA, the ETA-i's Audio-Video Forensic Analyst certification program (AVFA) is not only accredited, but also tracks with the ASTM's E2659 - Standard Practice for Certificate Programs.

Consider the many "certificates of training" that LEVA issued for the information sessions that I facilitated at its conferences over the years. I was quite explicit in describing my presence there, and what the session was - an information session. They weren't competency based training events. Yet, LEVA issued "certificates" for those sessions and many of the attendees considered themselves "trained." Section 4.2 of ASTM E2659 speaks to this problem.

Further to the point, Section 4.3 of ASTM E2659 notes "While certification eligibility criteria may specify a certain type or amount of education or training, the learning event(s) are not typically provided by the certifying body. Instead, the certifying body verifies education or training and experience obtained elsewhere through an application process and administers a standardized assessment of current proficiency or competency." The ETA-i meets this standard with its AVFA certification program. So does the IAI. LEVA does not.

We'll ignore the "court-recognized" portion as completely meaningless. "The court" has "recognized" every copy of my CV ever submitted - if by "recognized" you mean "the Court" accepted its submission - as in, "yep, there it is." But, courts in the US do not "approve" certification or training programs. To include the "court-recognized" statement is just banal.

If you wish, dive deeper into ASTM E2659. Section 5.1 speaks to organizational structure. In 5.1.2.1, the document notes: "The certificate program’s purpose, scope, and intended outcomes are consistent with the stated mission and work of the certificate issuer." Clearly, that's not true in LEVA's case. Are they a non-profit public benefit corporation or are they a commercial training and certification provider? This is an important question for ethical reasons. As a non-profit public benefit organization, they're entitled to many tax breaks not available to commercial organizations. As a non-profit, working in the commercial sphere, they receive an unfair advantage when competing in the market by not having to pay federal taxes on their revenue. Didn't we all take an ethics class at some point? I understand Jim Rome's "if you're not cheating, you're not trying" statements as relates to the New England Patriots and other sports franchises. But this isn't sports. Given their sponsorship statement, LEVA should dissolve as a non-profit public benefit organization and reconstitute itself as a commercial training provider and certificate program.

The commercial nature of LEVA is reinforced by its first ever publicly released financial statement and its tax filings. More than 80% of its efforts are training related. Its officers have salaries. Its instructors are paid (not volunteers). Heck, almost 50% of its expenses are for travel. On paper, LEVA looks like a subsidized travel club for select current / former government service employees.

Then there's the question of the profit. For a non-profit public benefit organization, its profits are supposed to be rolled back into its mission of benefiting the public. Nothing about the financial statement shows a benefit to the public. Also, the "Director Salaries" is a bit of a typo. Only one director gets paid. That $60,000 goes to one person. And, on LEVA's 2017 990 filing with the IRS, the director was paid $42,000. Given the growth in revenue from 2017, the roughly 43% raise was likely justified in the minds of the Board of Directors. If you are wondering about the trend, you're out of luck. The IRS' web site only lists two years of returns (2017 & 2018) - meaning it's likely none were filed for years previous.
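The size of that raise is worth checking with a couple of lines. Going from $42,000 to $60,000 is a raise of about 43%, whereas $42,000 is 70% of $60,000. The two are easy to mix up. The sketch below uses only those two figures; the helper name is mine.

```python
def percent_change(old, new):
    """Percentage change from old to new, relative to old."""
    return (new - old) / old * 100.0


salary_2017 = 42_000   # director pay on the 2017 Form 990, per the post
salary_later = 60_000  # "Director Salaries" on the financial statement, per the post

raise_pct = percent_change(salary_2017, salary_later)
ratio_pct = salary_2017 / salary_later * 100.0

print(f"raise: {raise_pct:.1f}%")                          # raise: 42.9%
print(f"2017 pay as a share of later pay: {ratio_pct:.1f}%")  # 70.0%
```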

Another issue with LEVA's status as a non-profit public benefit organization is that of giving back to the community. In all of the publicly available 990 filings, the forms show zero dollars given in grants or similar payouts.

[Images: excerpts from the 2017 and 2018 Form 990 filings]

No monies given as grants, yet their current filing shows quite a bit in savings.

[Image: 2018 Cash on Hand]

Clearly, LEVA will not miss my $75 annual dues payment with that kind of cash lying around.

If you're still here, let's move away from the financial and go a little farther down in Section 5. In 5.2.1.1 (11), nondiscrimination, LEVA necessarily and spectacularly fails. LEVA, by its very nature, discriminates in its membership structure. It discriminates against anyone who is not a current government service employee or a "friend of LEVA." This active discrimination (arbitrary classes of members and the denial of membership to those not deemed worthy) would disqualify it from accreditation by any body that uses ASTM E2659 as its guide.

All of this informs my decision to not renew my LEVA membership. I am personally very charitable. But, I have no intention of giving money to a commercial enterprise that competes with my training offerings. Besides, given their balance sheet, they certainly don't need my money. It's not personal. It's a proper business decision.

Thursday, December 12, 2019

Introduction to Forensic Multimedia Analysis with Amped FIVE - seats available

Seats Available!

Did your agency purchase Amped FIVE / Axon Five, but skip the training? Learn the whole workflow - from unplayable file, to clarified and restored video that plays back in court.

Course Date: Jan 20-24, 2020

Course location: Henderson, NV, USA.

Click here to sign up for Introduction to Forensic Multimedia Analysis with Amped FIVE.

Tuesday, December 10, 2019

Alternative Explanations?

I've sat through a few sessions on the Federal Rules of Evidence. Rarely does the presenter dive deep into the Rules, providing only an overview of the relevant rules for digital / multimedia forensic analysis.

A typical slide will look like the one below:

Those who have been admitted to trial as an Expert Witness should be familiar with (a) - (d). But, what about the Advisory Committee's Notes?

Deep in the notes section, you'll find this preamble:

"Daubert set forth a non-exclusive checklist for trial courts to use in assessing the reliability of scientific expert testimony. The specific factors explicated by the Daubert Court are (1) whether the expert's technique or theory can be or has been tested—that is, whether the expert's theory can be challenged in some objective sense, or whether it is instead simply a subjective, conclusory approach that cannot reasonably be assessed for reliability; (2) whether the technique or theory has been subject to peer review and publication; (3) the known or potential rate of error of the technique or theory when applied; (4) the existence and maintenance of standards and controls; and (5) whether the technique or theory has been generally accepted in the scientific community. The Court in Kumho held that these factors might also be applicable in assessing the reliability of nonscientific expert testimony, depending upon “the particular circumstances of the particular case at issue.” 119 S.Ct. at 1175.

No attempt has been made to “codify” these specific factors. Daubert itself emphasized that the factors were neither exclusive nor dispositive. Other cases have recognized that not all of the specific Daubert factors can apply to every type of expert testimony. In addition to Kumho, 119 S.Ct. at 1175, see Tyus v. Urban Search Management, 102 F.3d 256 (7th Cir. 1996) (noting that the factors mentioned by the Court in Daubert do not neatly apply to expert testimony from a sociologist). See also Kannankeril v. Terminix Int'l, Inc., 128 F.3d 802, 809 (3d Cir. 1997) (holding that lack of peer review or publication was not dispositive where the expert's opinion was supported by “widely accepted scientific knowledge”). The standards set forth in the amendment are broad enough to require consideration of any or all of the specific Daubert factors where appropriate."

Important takeaways from this section:

- (1) whether the expert's technique or theory can be or has been tested—that is, whether the expert's theory can be challenged in some objective sense, or whether it is instead simply a subjective, conclusory approach that cannot reasonably be assessed for reliability;

- (2) whether the technique or theory has been subject to peer review and publication;

- (3) the known or potential rate of error of the technique or theory when applied;

- (4) the existence and maintenance of standards and controls; and

- (5) whether the technique or theory has been generally accepted in the scientific community.

As to point 1, the key words are "objective" and "reliability." Part of this relates to a point further on in the notes - did you adequately account for alternative theories or explanations? More about this later in the article.

To point 2, many analysts confuse "peer review" and "technical review." When you share your report and results in-house, and a coworker checks your work, this is not "peer review." This is a "technical review." A "peer review" happens when you or your agency sends the work out to a neutral third party to review the work. See ASTM E2196 for the specific definitions of these terms. If your case requires "peer review," we're here to help. We can either review your case and work directly, or facilitate a blind review akin to the publishing review model. Let me know how we can help.

To point 3, there are known and potential error rates for disciplines like photographic comparison and photogrammetry. Do you know what they are and how to find them? We do. Let me know how we can help.

To point 4, either an agency has their own SOPs or they follow the consensus standards found in places like the ASTM. If your work does not follow your own agency's SOPs, or the relevant standards, that's a problem.

To point 5, many consider "the scientific community" to be limited to certain trade associations. Take LEVA, for example. It's a trade group of mostly government service employees at the US/Canada state and local level. Is "the scientific community" those 300 or so LEVA members, mostly in North American law enforcement? Of course not. Is "the scientific community" inclusive of LEVA members and those who have attended a LEVA training session (but are not LEVA members)? Of course not. The best definition of "the scientific community" that I've found comes from Scientonomy. Beyond the obvious disagreement between how "the scientific community" is portrayed at LEVA conferences vs. the actual study of the scientific mosaic and epistemic agents, a "scientific community" should at least act in the world of science and not rhetoric - proving something as opposed to declaring something.

Further down the page, we find this:

"Courts both before and after Daubert have found other factors relevant in determining whether expert testimony is sufficiently reliable to be considered by the trier of fact. These factors include:

- (3) Whether the expert has adequately accounted for obvious alternative explanations. See Claar v. Burlington N.R.R., 29 F.3d 499 (9th Cir. 1994) (testimony excluded where the expert failed to consider other obvious causes for the plaintiff's condition). Compare Ambrosini v. Labarraque, 101 F.3d 129 (D.C.Cir. 1996) (the possibility of some uneliminated causes presents a question of weight, so long as the most obvious causes have been considered and reasonably ruled out by the expert)."

This statement speaks to the foundations of one's conclusions. In performing a photographic comparison, how many common points between the "known" and the "unknown" equals a match? On what do you base your opinion? SWGIT Section 16 has a nice chart to guide the analyst in explaining the strength of one's conclusion. Those that used this often had a checklist, combined with threshold values - so many common points equals a match.

But can one account for obvious alternative explanations if the quality / quantity of data is minimal? Of course not. This goes to the comedic statement often made, "these five white pixels do not make a car." The obvious alternative explanations are every other car on the planet, or in the greater region if you will.

When I perform a "peer review," the work encompasses not only the technical / scientific aspects of the package of data, but also the rules of evidence. If you failed to account for alternative explanations, I'll let you know. If your report is long on rhetoric and short on science, I'll let you know. If you're an attorney and you're wondering about the other side's work, I'll let you know. In performing this service, it's based in science, standards, and the law - not on rhetoric or my personal standing in the scientific community.

If you need help with a case, let me know how we can help. We're open for business.

Monday, December 9, 2019

Competition is a good thing

As a certified audio video forensic analyst, I need a platform with which to perform my work. I've been rather clear in the past as to my choice of platform and the reasons for those decisions. But, an honest and ethical analyst must always check assumptions and assess the marketplace. Has the chosen toolset improved or declined vs the types of evidence items under exam today? Has the platform's value proposition changed? Are price increases demanded by vendors justified based on an ROI metric? To be ethical, these decisions should be founded in data and not personality.

Indeed, I'm open for business. But, in order to maintain that business' health, I must regularly assess the state of the industry.

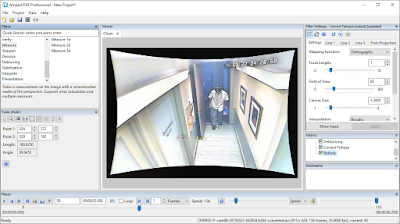

There are essentially three vendors offering tools for the forensic analysis of digital / multimedia evidence. These are, in alphabetical order, Amped SRL, Cognitech, and Occam. Amped SRL offers a variety of tools, as does Cognitech. Occam's primary offering is Input-Ace.

If you've never heard of Cognitech, that's understandable. Unfortunately, their tools aren't effectively marketed. But, that being said, their tools are based on some amazing proprietary algorithms and processes. Those that can decipher their tool's confusing UI and workflow will achieve results not possible in their competition's tools.

Whilst Cognitech continues to expand the science, offering tools and processes straight from image science academia, Occam and Amped are chasing an entirely different audience. Cognitech is attempting to appeal to scientists and analysts. Occam's and Amped's recent marketing attempts to attract the attention of unskilled investigators.

Amped's Italian CEO announced through his social media channels that their new tool, Replay, will be demonstrated via a webinar soon. The message in the advertisement is that the tool will assist "police officers" and/or "police investigators" to "close more cases."

The problem with this message is that their tool, Replay, doesn't contain any actual analytical capabilities. Thus, untrained and unskilled staff may make the wrong call in closing a case based on what they see in the Replay UI. It's clear from the messaging that Amped SRL, which is based in Italy, doesn't quite understand the legal context outside of Italy.

On the other side of the world, Occam's Input-Ace is being aggressively marketed. Its CEO is on a cross-country tour touting the tool and the message of "video literacy." The target audience is the same as Amped's: law enforcement staff.

The message of building "video literacy" is likely the more appropriate in this case. When investigators understand their tool, as well as the results that the tool displays, they can make an informed decision. "Video literacy" as a goal, empowers investigators to engage with analysts - teaching them the language and foundations of the evidence they're viewing.

In the competition between the two tools, Replay and Input-Ace, it seems that the market is deciding. To be sure, competition is a good thing. Competition drives innovation and speeds development. Occam, in partnership with Cellebrite, is offering courses on its tools all around the US and the world. Learners gain a thorough introduction to the tool, as well as how it's positioned within the processing workflow.

For those learners wanting to dive deeper into the underlying science, standards, and procedures for the analysis of this vital evidence (non-tool specific), my fundamentals course is offered on-line as micro learning. Once your eyes are opened to the tools and the evidence types, you'll need to understand the discipline at a deeper level. Moving this course on-line assists investigators and analysts in achieving this goal on their schedule, and at an affordable price.

If you've examined the evidence, and want a full analysis but lack the tools, that's where I can help. Again, as a certified analyst, I'm open for business. Contact me and let me know how I can assist you and your case.

Have a great day, my friends.

keywords: audio forensics, video forensics, image forensics, audio analysis, video analysis, image analysis, forensic video, forensic audio, forensic image, digital forensics, forensic science, amped five, axon five, training, forensic audio analysis, forensic video analysis, forensic image analysis, amped software, amped software training, amped five training, axon five training, amped authenticate, amped authenticate training

Tuesday, December 3, 2019

End of the Year Sale

I'm here to help.

Learn the whole workflow - from unplayable file, to clarified and restored video that plays back in court. For those with limited training and travel budgets, our popular course, Introduction to Forensic Multimedia Analysis with Amped FIVE is now available on-line as self-paced micro learning for an amazingly low introductory price of $495.00.

Click here to sign up today for Introduction to Forensic Multimedia Analysis with Amped FIVE - on-line.


Tuesday, November 26, 2019

Sustainability and getting the most for your training and education dollars

Get the most out of your training budget and save the planet whilst doing it.

We live in interesting times. There are a couple of cultural themes dominating the planning sessions in government and private industry. Governments and private firms are concerned about sustainability and austerity.

In order to satisfy sustainability initiatives, agencies are restricting travel. Given that airline travel requires airplanes to consume carbon-based fuel in mass quantities, traveling to training is seen as damaging to the climate. Additionally, most government agencies will not allow a private firm to come in to a government facility and re-sell training seats in order to bring down the costs of training to small agencies. Thus, small agencies are faced with not being able to travel, and not being able to host due to the costs of purchasing on-site training.

In terms of austerity, many states aren't doing well financially. The pension crisis is hitting, homelessness is a real problem, and governments are focusing their attention - and their budgets - on the many social problems they are facing. Agencies are tasked with doing more, with fewer funds with which to do it. The days of "baseline budgeting" are drawing to an end as state / local governments face severe shortfalls.

I've been providing on-site training for over a decade now. I can't quantify the number of times I've been asked to hold a seat, or the times that a class was full on the one date that worked for a student who couldn't get their registration completed in time.

On-line training and education solve all of these problems. According to the U.S. Department of Education, the most common reasons providers cite for offering online courses are to meet student demand, provide access to those who can't get to campus, and to make more courses available.

We've heard you. We're here to help.

Yesterday, I announced that our most popular course - Introduction to Forensic Multimedia Analysis with Amped FIVE - has found a home in our state-of-the-art learning management system. By offering our Intro to FIVE course on-line:

- Travel is no longer a problem. Consider how much travel, lodging, and per-diem cost, and how many additional people can be trained at your agency with the savings.

- Time is no longer a problem. With micro learning, you learn at your own pace and on your own schedule. You can easily fit an on-line course of instruction into your daily schedule. Studies show better retention of information in adult learners with micro learning.

- Sustainability is no longer a problem. With on-line training and education, the learning experience comes to you ... helping your agency and government meet its sustainability goals.

With interactive text, videos, and assignments, the learning experience moves beyond a simple demonstration event (webinar / information session) to create real growth in your knowledge, skills, and experience.

I'm excited about this news. I hope that you are too.

You can find out more, or sign up today, by clicking here.

See you in class, my friends.

Monday, November 25, 2019

Introduction to Forensic Multimedia Analysis with Amped FIVE – Now On-Line

Learn Amped FIVE anywhere, anytime!

The most cost effective training on Amped FIVE available!

[Image: The same great training, now offered on-line at a lower price.]

So many users of FIVE complained about the cost of travel to attend a training session. Others couldn't leave their state for training. Still others didn't have the time to dedicate a week away from work to go to training. I hear you. I'm here to help. My most popular course, Introduction to Forensic Multimedia Analysis with Amped FIVE, is now available on-line in a state-of-the-art Learning Management System as micro learning. That should help ease the pressure on your calendar and comply with your agency's travel restrictions. In order to ease the pressure on your budget, I'm offering this course for an amazingly low introductory price of US$ 995.00.

As vendors are raising prices, I'm looking for ways to deliver value and save you money.

Remember, this class serves as the entry point for those analysts involved in forensic image and video analysis, also known as Forensic Multimedia Analysis, and their use of FIVE as their software platform.

Sign-up today and take advantage of this incredible opportunity. To view the syllabus, click here.

And because someone will ask, this course is not endorsed by or offered from Amped SRL, an Italian company. It’s offered from a US-based business under the Fair Use doctrine, in the same way my Forensic Photoshop book was published and the accompanying training delivered. See (Apple v Franklin), 17 U.S.C. § 101. This course does not involve the distribution of software. And besides, we're moving our training offerings on-line to help municipalities meet their sustainability goals by reducing training travel. We're just trying to do our part to help the planet. Do your part and sign up today for Introduction to Forensic Multimedia Analysis with Amped FIVE – On-Line.


Thursday, November 21, 2019

Source Attribution as an Ultimate Opinion

An interesting new article is available from David H. Kaye in Jurimetrics: The Journal of Law, Science and Technology Vol. 60, No. 2, Winter 2020.

Abstract

For decades, scientists, statisticians, psychologists, and lawyers have urged forensic scientists who compare handwriting, fingerprints, fibers, electronic recordings, shoeprints, toolmarks, soil, glass fragments, paint chips, and other items to change their ways. At last it seems that “[t]he traditional assumption that items like fingerprints and toolmarks have unique patterns that allow experts to accurately determine their source . . . is being replaced by a new logic of forensic reporting.” With the dissemination of “probabilistic genotyping” software that generates “likelihood ratios” for DNA evidence, the logic is making its way into US courts.

Recently, the Justice Department’s Senior Advisor on Forensic Science commented on a connection between prominent statements endorsing the new logic and an old rule of evidence concerning “ultimate issues.” He suggested that “some people . . . would like to pretend that [Federal Rule of Evidence 704] doesn't exist, but it actually goes against that school of thought.” This essay considers the nexus between Rule 704 and forensic-science testimony, old and new. It concludes that the rule does not apply to all source attributions, and even when it does apply, it supplies no reason to admit them over likelihood statements. In ruling on objections to traditional source attributions buttressed by the many calls for evidence-centric presentations, courts would be remiss to think that Rule 704 favors either school of thought.

Important quote:

"... Thus far, I have assumed that all source attributions are ultimate opinions as those words are used in Rule 704. The rule applies to any opinion from any witness—expert or lay—that “embraces an ultimate issue.” But the identity of the source of a trace is not necessarily an ultimate issue. The conclusion that the print lifted from a gun comes from a specific finger does not identify the murder defendant is the one who pulled the trigger. The examiner’s source attribution bears on the ultimate issue of causing the death of a human being, but the examiner who reports that the prints were defendant's is not opining that the defendant not only touched the gun (or had prints planted on it) but also pulled the trigger. Indeed, the latent print examiner would have no scientific basis for such an opinion on an element of the crime of murder..."

Download the full article here.

Have a great day, my friends.

Keywords: evidence, forensic science, identification, expert opinions, ultimate issues, Rule 704, likelihoods, individualization, probability

Wednesday, November 20, 2019

How do you explain the weight of evidence?

Learn the use of probability to explain the weight of evidence in the new Statistics for Forensic Analysts course, available on-line as micro learning. For more information, click here.

Sign up today and start learning statistical concepts as they relate to Forensic Science including standard error, standard deviation, confidence interval, significance level, likelihood ratio, probability, conditional probability, Bayes’ theorem, and odds.

Tuesday, November 19, 2019

Redaction - where do you start?

Learn to redact multimedia evidence for court, media release, or standards compliance. Four new redaction courses are available on-line as micro learning featuring the most popular tools used for redaction. For more information, click here.

Sign up today and start learning to redact multimedia files. These courses will get you up to speed on the issues and the technology necessary to quickly and confidently tackle this complex task.

Monday, November 18, 2019

Gaps in vendor-specific training?

Did your tool-specific training in video forensics leave you with questions? Do you want to know more? Want to dive deep into the science of forensic multimedia analysis? Click here to sign up for Introduction to Forensic Multimedia Analysis, available on-line as micro learning.

This course is the perfect complement to your vendor's training courses, offering a deep dive into the science that helps you to answer those "why" questions, helping you become a better analyst and a more confident expert witness.


Friday, November 15, 2019

Windows Sandbox vs Virtual Machine

Recently, I spent a week in New Jersey teaching a week-long course on forensic multimedia analysis with Amped FIVE. On day four of the class, we spent the morning installing, configuring, and working within virtual machines.

I've been using Oracle's Virtual Box for a while now. It's what we were working with in the course. It's easy to set-up and use. Plus, it's free.

But, the inevitable question came up. Why not use the new Windows Sandbox feature instead of Virtual Box, or other VM?

In their specific case, the answer was easy - the computers in their training room would not support Sandbox. To use Sandbox, your computer must meet minimum specifications.

Yes, ICYMI, FIVE will run in a VM. I've installed FIVE in the popular VMs out there and it works just fine. The nice thing about FIVE is that it runs off a license key (dongle). With a VM, I can assign the USB port with the dongle to the VM to let FIVE run in the VM. Some of the other analysis programs out there require machine codes on installation, which will complicate matters. Some vendors allow only a single installation per license. With FIVE, you can install it everywhere. The dongle is portable. The software installation is quite agile.

In my laboratory work, I have VMs set up for specific cases. I also have VMs set up for specific codecs / players (like Walmart's Verint / March Networks codecs). I can save these VMs. I can share these VMs for discovery.

Not so with Sandbox. Sandbox is volatile. Once you shut it down, everything you were just doing is gone for good. But, don't worry about accidents. Microsoft warns you of this.

My worry with Sandbox is that I've created something for a case. Then, when I shut it down, I necessarily destroy it. I'm just not comfortable with that. Thus, I still use Virtual Box.

Additionally, with a Virtual Box, I set things up once. Then, in the case of test/validate, I can use the space multiple times if needed. With Sandbox, I must set things up from scratch each time. Such a waste IMHO.

That's not even considering Windows stability issues and crashes. There's no "auto recovery" feature to Sandbox if the host OS crashes.

I've been using Oracle's Virtual Box for a while now. It's what we were working with in the course. It's easy to set-up and use. Plus, it's free.

But, the inevitable question came up. Why not use the new Windows Sandbox feature instead of Virtual Box, or other VM?

In their specific case, the answer was easy - the computers in their training room would not support Sandbox. To use Sandbox, your computer must meet minimum specifications.

- Windows 10 Pro or Enterprise build 18305 or later

- AMD64 architecture

- Virtualization capabilities enabled in BIOS

- At least 4GB of RAM (8GB recommended)

- At least 1 GB of free disk space (SSD recommended)

- At least 2 CPU cores (4 cores with hyperthreading recommended).

If your computer is capable of running Sandbox, setting it up is as simple as turning the feature on in the Windows Features dialog box.

Image: Turn Windows Sandbox on in the Windows Features dialog box.

The training machines at this agency were 32-bit, with only 4GB of RAM.
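If you want to run that checklist against a machine before class, here's a minimal sketch in Python. It only encodes the minimum specifications listed above. The `HostSpecs` structure and the `sandbox_blockers` function are hypothetical names of my own, and the values are typed in by hand rather than read from the system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HostSpecs:
    """Facts about a host PC, entered by hand (hypothetical structure)."""
    edition: str             # Windows 10 edition, e.g. "Pro" or "Home"
    build: int               # Windows 10 build number
    is_amd64: bool           # True for a 64-bit (AMD64) system
    virtualization_on: bool  # virtualization enabled in BIOS
    ram_gb: float
    free_disk_gb: float
    cpu_cores: int


def sandbox_blockers(specs: HostSpecs) -> List[str]:
    """Return the minimum requirements from the list that the host fails."""
    blockers = []
    if specs.edition not in ("Pro", "Enterprise"):
        blockers.append("Windows 10 Pro or Enterprise")
    if specs.build < 18305:
        blockers.append("build 18305 or later")
    if not specs.is_amd64:
        blockers.append("AMD64 architecture")
    if not specs.virtualization_on:
        blockers.append("virtualization enabled in BIOS")
    if specs.ram_gb < 4:
        blockers.append("at least 4GB of RAM")
    if specs.free_disk_gb < 1:
        blockers.append("at least 1 GB of free disk space")
    if specs.cpu_cores < 2:
        blockers.append("at least 2 CPU cores")
    return blockers


# Only "32-bit" and "4GB" come from the post; every other value is assumed.
training_pc = HostSpecs(edition="Pro", build=18362, is_amd64=False,
                        virtualization_on=True, ram_gb=4,
                        free_disk_gb=50, cpu_cores=2)
print(sandbox_blockers(training_pc))
```

With those assumed values, the only blocker reported is the architecture. 4GB of RAM meets the stated minimum, but a 32-bit machine is ruled out.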

Yes, ICYMI, FIVE will run in a VM. I've installed FIVE in the popular VMs out there and it works just fine. The nice thing about FIVE is that it runs off a license key (dongle). With a VM, I can assign the USB port with the dongle to the VM to let FIVE run in the VM. Some of the other analysis programs out there require machine codes on installation, which will complicate matters. Some vendors allow only a single installation per license. With FIVE, you can install it everywhere. The dongle is portable. The software installation is quite agile.

Image: FIVE running inside of Windows Sandbox.

Not so with Sandbox. Sandbox is volatile. Once you shut it down, everything you were just doing is gone for good. But, don't worry about accidents. Microsoft warns you of this.

Image: Windows Sandbox warning about losing everything once you close the window.

Additionally, with VirtualBox, I set things up once. Then, in the case of test/validate, I can use the space multiple times if needed. With Sandbox, I must set things up from scratch each time. Such a waste IMHO.

That's not even considering Windows stability issues and crashes. There's no "auto recovery" feature to Sandbox if the host OS crashes.
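One way to get that "set up once, reuse many times" behavior in VirtualBox is a snapshot. Here's a minimal sketch that builds the two `VBoxManage snapshot` commands involved. It assumes `VBoxManage` is on your PATH. The VM name and snapshot name are hypothetical, and this is just one possible approach, not a description of any particular lab's procedure.

```python
import subprocess
from typing import List

VM_NAME = "FIVE-validation"   # hypothetical VM name
SNAPSHOT = "clean-setup"      # hypothetical snapshot name


def take_snapshot_cmd(vm: str, snapshot: str) -> List[str]:
    """Command that saves the VM's current state under a name."""
    return ["VBoxManage", "snapshot", vm, "take", snapshot]


def restore_snapshot_cmd(vm: str, snapshot: str) -> List[str]:
    """Command that rolls the VM back to a saved state (VM must be powered off)."""
    return ["VBoxManage", "snapshot", vm, "restore", snapshot]


def run(cmd: List[str]) -> None:
    """Execute a command and raise if VBoxManage reports an error."""
    subprocess.run(cmd, check=True)


# Print the commands instead of running them, so nothing changes by accident.
print(" ".join(take_snapshot_cmd(VM_NAME, SNAPSHOT)))
print(" ".join(restore_snapshot_cmd(VM_NAME, SNAPSHOT)))
```

Take the snapshot once after the tools are installed, then restore it before each test/validate run. Pass either command to `run()` when you're ready to execute it for real.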

The other nice thing about VirtualBox is its extension packs. The main extension pack from Oracle extends USB functionality within your VM. "VirtualBox Extension Pack" adds support for USB 2.0 & 3.0 devices such as network adapters, flash drives, hard disks, web cams, etc., that are inserted into physical USB ports of the host machine. These can then be attached to the VM running on VirtualBox. As a result, you can use a physical USB device in a guest operating system. There are other extension packs, with new ones being developed all the time.
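For a license dongle, a USB filter tells VirtualBox to hand a specific device to a specific VM. Here's a minimal sketch that builds the `VBoxManage` commands for that. The VM name, filter name, and vendor/product IDs are placeholders. Look up the real IDs for your own device in the output of `VBoxManage list usbhost`.

```python
from typing import List


def list_host_usb_cmd() -> List[str]:
    """Command that lists USB devices on the host, with vendor and product IDs."""
    return ["VBoxManage", "list", "usbhost"]


def dongle_filter_cmd(vm: str, vendor_id: str, product_id: str,
                      index: int = 0, name: str = "license-dongle") -> List[str]:
    """Command that adds a USB filter so the matching device attaches to the VM."""
    return ["VBoxManage", "usbfilter", "add", str(index),
            "--target", vm, "--name", name,
            "--vendorid", vendor_id, "--productid", product_id]


# "FIVE-validation", "1234" and "abcd" are placeholders, not real dongle values.
print(" ".join(list_host_usb_cmd()))
print(" ".join(dongle_filter_cmd("FIVE-validation", "1234", "abcd")))
```

The sketch only prints the commands. Run them in a terminal once you've swapped in the IDs for your own device.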

If you haven't tried working in virtual machines, give it a go for yourself. If you'd like hands-on guidance, you're welcome to sign up for one of our upcoming training sessions. Check the calendar on our web site for available dates. We teach VMs within the Advanced Processing Techniques course. If you don't see a date that works for you on our calendar, but want to schedule a class, contact us about bringing a course to your agency or adding a course to our local training calendar ... or about our new micro learning options for self-directed learners.

Have a great day, my friends.

Thursday, November 14, 2019

Deep-fakes, Photoshopping, and the Post-Truth Society.

With deep fake videos & Photoshopped images, how do you know you can trust what you see and hear? Learn the science of authentication in this new Forensic Multimedia Authentication course. It's available on-line as micro learning. Click here for more information.

Remember, it’s not “just video.” Often times, it's the only witness to the events of the day. Please don’t let expediency guide your path. “Video evidence is a lot like nitroglycerin: Properly handled, it can demolish an opposing counsel’s case. Carelessly managed, it can blow up in your face.”

Monday, October 21, 2019

Right to Silence and the collection of evidence

When I retired from police service, I had spent a third of my life working for the LAPD. One of the questions that someone with my resume will eventually face is the balance of case types worked, the implication being that I would have mostly worked for the prosecution of criminal defendants. But, in Los Angeles County, there's an over 90% plea rate. In reality, I worked on the major criminal cases about 50% of the time, with the remainder spent working in the defence of my self-insured city. I've also worked in the defence of officers falsely accused of perjury (People v Abdullah) and have donated a lot of time for case review in support of Innocence Project type cases.

If you're an American, you will be more familiar with the Miranda warning than the warning shown above from the UK. But, I think the Miranda warning, and the 5th Amendment in general, are setting people up for failure in successfully defending themselves in court (assuming they're innocent of all charges). Here's what I mean.

Americans generally believe that it's up to the State to prove guilt. Whilst this is true in theory, there are so many stories about innocent people pleading guilty to charges based on a "risk assessment" that they make about their potential to succeed at trial. The Prosecution has their theory of a case. The State's investigative might has been focussed on the Prosecution's theory in the collection of evidence. They're not necessarily concerned with the collection of alibi evidence for you. They may stumble upon potentially exculpatory evidence, but that's not their job - it's yours.

This is where I think the UK's standard admonition is more honest. Sure, you have the Right to Silence. But, by remaining silent, you may actually harm your defence.

If you are innocent, you know where you've been, with whom you've associated during the time in question, places you've visited, etc. Your defence team must begin its own evidence collection process. Your movements throughout the day all leave traces - the store, the gas station, the coffee shop, highway tolls, etc. There's very little about one's life these days that isn't tracked or recorded. In addition to CCTV video of you going about your day, your phone has likely recorded even more details about where you've been and what you've been doing. Now, there are even personal home assistants like Alexa and Siri that can serve as a witness to your movements.

All of this data must be preserved. However, the average person doesn't have the resources or the know-how to collect and preserve digital evidence properly. Some items, like cell tower logs, may require a warrant to acquire. You must have a capable and aggressive lawyer working on this for you.

I'm here to help. I've seen all sides of this and have been working on standards for digital evidence collection and processing for well over a decade. I've taught classes to public and private audiences on this as well (click here for more info).

Yes, in the US we have the presumption of innocence. But, the best defense is a good offense. You must go on the offense and collect the evidence that proves your innocence. The evidence shows that it will harm your defence if you don't.

Tuesday, October 15, 2019

Tuesday, October 8, 2019

Mind the Communication Gap

I received a panicked email and phone call last week that can easily be summarized by the graphic above. Yes, there are still vendors selling to the police services that don't understand or accommodate the agencies' needs or schedules.

As most in government service know, often the ability to spend money on tools and training happens within a short window of time. Such a window had opened for this person and they reached out to a company in response to a bit of marketing on new redaction functionality in a particular piece of software.

In the email that was shared with me, the requestor made it clear that they wanted to redact footage from body worn cameras. A trial code was shared for the company's tool. The requestor eagerly downloaded and installed the software, then assembled his supervisors and stakeholders to evaluate the new software. That's when the problems began.

Redacting the visual information was relatively straightforward, but cumbersome. However, he couldn't figure out how to redact the audio portion of the file. He reached out to the company's rep. Unfortunately, it was late on Friday in California. He received no reply from the company's rep, who'd likely gone home for the weekend. Thus, having previously communicated with me on technology issues, he reached out hoping that I'd still be around.

I let him know that to the best of my knowledge, none of Amped SRL's products redact audio. Yes, they'd promised audio redaction in FIVE at the 2016 LEVA conference. But, it's never materialized. As it's not been developed, it's quite likely that there is no audio redaction functionality in Replay.

He received a note from the sales rep on Monday. It informed him that the redaction functionality was built around a CCTV use case, and as such, did not concern itself with audio. The order taker that responded to the inquiry could have saved the requestor a ton of time and stress by simply reading the request - redaction of BWC footage - and adding a helpful disclaimer in the reply that the tech doesn't redact audio.

Redaction remains a huge issue in California. Agencies are looking for a quick fix, but no easy solutions have presented themselves. The marketing from Amped seemed to provide a glimmer of hope to the requestor. But those hopes were dashed when the advice from the order taker, three days late, was that there is no single redaction solution - which isn't entirely true.

As I informed the requestor on Friday evening, the Adobe Creative Suite has all the necessary tools to perform a standards-compliant redaction for California's new laws. The automatic tracking in Premiere Pro is the best currently available. Plus, at $52.99/month, the cost savings are substantial vs. FIVE or Replay.

Given that the requestor had until 1700 PDT to spend the allocated funds, not receiving a reply until the following Monday was not an option. Thankfully, I was able to answer his questions and get him on the right path. Next stop for him is my redaction class, featuring the Adobe tools (link).

Have a great day, friends.
