The presenter outlined his workflow for working with video from a few different sources. The presentation turned to the difference between how Direct Show handles video playback vs. what's actually in the data stream. The presenter showed how Direct Show may not give you the best version of the data to work with. If you've been around LEVA for a while, you likely know this already. It's good information to know.

Then the presenter did a comparison of a corrected frame from the data stream vs. a frame from the video as played via the Direct Show codec - in Photoshop. He made an interesting statement that prompted me to write this post. He was clicking between the layers so that viewers could "see" that there was a difference between the two frames. The implication was that the viewer could clearly "see" the difference. He was making the point that one frame had more information / a more clear display of the information - illustrating it visually (demonstratively).

This got me thinking - does he know how people "see?"

I'll get to the difference between his workflow (using many tools) and Five (using one tool) in a bit. But first, I want to address his point about "clearly seeing."

I do not hide the fact that I am autistic. Thanks to the changes in the DSM, my original brain wiring diagnoses that were made during the reign of DSM IV now place me firmly on the autism spectrum in DSM V. Sensory Processing Disorder and Prosopagnosia have made life rather interesting; especially growing up in a time when doctors didn't understand these wiring issues at all. As an analyst, they present challenges. But they also present opportunities. Can a person doing manual facial comparison be accused of bias if they're face blind? Not sure. Never been asked. But I digress.

Let's not forget that the majority of what we do involves some sort of visual examination. According to just about every agency's entry rules, examiners must have normal color vision, depth perception, and sufficiently good corrected vision. Vision is acceptable if it is 20/20 or better uncorrected. If vision is uncorrected at 20/80 or better and can be corrected to 20/20 by the use of glasses or hard contacts, it is acceptable. If vision is uncorrected at 20/200 or better and can be corrected to 20/20 by the use of soft contacts, it is acceptable. Vision surgically corrected (such as by radial keratotomy or Lasik) to 20/20 is acceptable once visual acuity has stabilized. All of this is to say, how your mechanical devices (eyes, ears, brain) interact with your environment helps to form what you "know."

I've spent my academic life studying the sensory environment. My PhD dissertation focusses on the college sensory environment that is so hostile, autistic college students would rather drop out than stick it out. But again, I've studied and written extensively on the issue of what people perceive, so the presenter's statement struck me.

It also struck me from the standpoint of what we "know" vs. what we can prove.

The presenter took viewers on quite a tour of a hex editor, GraphStudio, his preferred "workflow engine," and Photoshop before making the statement that prompted this post. A lot of moving parts. Along the way, the story of how information is displayed and why it's important to "know" where differences can occur was driven home.

Yes, we can all agree that there are differences between how Direct Show shows things and how a raw look at the video shows things. It may be helpful to "see" this. But what if you don't perceive the world in the same way as the storyteller?

Might there be another way to perform this experiment that doesn't rely on the viewer's perception matching that of the presenter?

Thankfully, with FIVE, there is.

The presenter started with Walmart (SN40) video being displayed via Direct Show. So, I'll start there too. SN40, via Direct Show, displays as 4 CIF.

Then, I used FIVE's conversion engine to copy the stream data into a fresh container.

It displays as 2 CIF.

I selected the same frame in each video and bookmarked them for export.

I brought these images back into FIVE for analysis.

The issue with 2 CIF is that, in general, every other line of resolution isn't actually recorded and needs to be restored via some valid and reliable process. FIVE's Line Doubling filter allows me to restore these lines. I can choose the interpolation method during this process. The presenter in the video chose a linear interpolation method to restore the lines (in Photoshop - not his "workflow engine"), so I did the same.
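For readers who want to see what restoring lines by linear interpolation means in practice, here is a minimal Python sketch. It is not FIVE's Line Doubling filter or Photoshop's resampling; it assumes the recorded lines are the even-numbered rows of the full frame, that pixels are plain integer values, and the function name `line_double_linear` is made up for illustration.

```python
def line_double_linear(field):
    """Double the line count of a field (a list of pixel rows).

    Recorded rows are kept as-is. Each missing row is filled with the
    rounded average of the recorded rows directly above and below it.
    The final missing row has no row below, so the last recorded row
    is repeated.
    """
    frame = []
    for i, row in enumerate(field):
        frame.append(list(row))  # the recorded line, unchanged
        if i + 1 < len(field):
            below = field[i + 1]
            # Linear interpolation at the midpoint is a simple average.
            frame.append([(a + b + 1) // 2 for a, b in zip(row, below)])
        else:
            frame.append(list(row))  # bottom edge: repeat the last line
    return frame


# A tiny 3-line field with 2 pixels per line.
field = [[10, 20], [30, 40], [50, 60]]
frame = line_double_linear(field)
```

The point of the sketch is that every restored line is computed from recorded data by a stated rule, so the same input always produces the same output and the step can be described in a report.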

I've now restored the stream copy frame. I wanted not only to "see" the difference between frames ("what I know"), I wanted to compute the difference between frames ("what I can prove").

Again, staying in FIVE, I linked the Direct Show frame with the Stream Copy frame using the Video Mixer (found in the Link filter group).

The filter settings for the Video Mixer contain three tabs. The first tab (Inputs) allows the user to choose which processing chains to link, and at what step in the chain.

The second tab (Blend) allows the user to choose what is done with these inputs. In our case, I want to Overlay the two images.

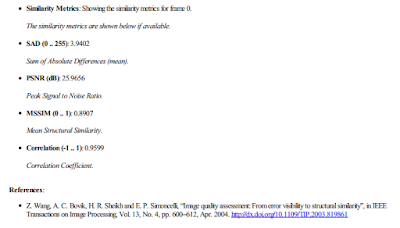

The third tab (Similarity) is where we transition from the demonstrative to the quantitative. Unlike Photoshop's Difference Blend Mode, FIVE doesn't just display a difference (is there a threshold where a difference is present but not displayed by your monitor?); it computes similarity metrics.

With the Similarity Metrics enabled, FIVE computes the Sum of Absolute Difference (SAD), the Peak Signal to Noise Ratio (PSNR), Mean Structural Similarity, and the Correlation Coefficient. The actual difference, computed 4 different ways. You don't just "see" or "know" - you prove.
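To make the idea of computing a difference concrete, here is a rough Python sketch of three of these metrics using their textbook definitions. It is not FIVE's code and may not match FIVE's numbers exactly; it assumes 8-bit grayscale frames stored as lists of rows, and the function names are illustrative only. Mean Structural Similarity is left out because it needs windowed local statistics that would not fit in a short example.

```python
import math


def flatten(img):
    """Turn a list of pixel rows into one flat list of pixel values."""
    return [p for row in img for p in row]


def sad(a, b):
    """Sum of absolute differences between two same-sized frames."""
    return sum(abs(x - y) for x, y in zip(flatten(a), flatten(b)))


def psnr(a, b, peak=255):
    """Peak signal-to-noise ratio in dB; infinite for identical frames."""
    fa, fb = flatten(a), flatten(b)
    mse = sum((x - y) ** 2 for x, y in zip(fa, fb)) / len(fa)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


def correlation(a, b):
    """Pearson correlation coefficient between the two frames' pixels."""
    fa, fb = flatten(a), flatten(b)
    mean_a, mean_b = sum(fa) / len(fa), sum(fb) / len(fb)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(fa, fb))
    var_a = sum((x - mean_a) ** 2 for x in fa)
    var_b = sum((y - mean_b) ** 2 for y in fb)
    if var_a == 0 or var_b == 0:
        return math.nan  # undefined when a frame is perfectly flat
    return cov / math.sqrt(var_a * var_b)


# Two tiny frames that differ by a single gray level in one pixel.
frame_a = [[10, 20], [30, 40]]
frame_b = [[10, 21], [30, 40]]

print("SAD:", sad(frame_a, frame_b))
print("PSNR (dB):", round(psnr(frame_a, frame_b), 2))
print("Correlation:", round(correlation(frame_a, frame_b), 5))
```

The two example frames differ by one gray level in one pixel, which few people would pick out by clicking between layers. The SAD is still nonzero, the PSNR is finite, and the correlation is slightly below 1, so the difference is measured whether or not anyone perceives it.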

The reporting of this is done at the click of the mouse. FIVE has been keeping track of your processing and the results are compiled and produced on demand - no typing your report. (My arthritic fingers thank the programmers each day.)

Total time for this experiment was under 5 minutes. I'm sure the presenter could have been faster than what was shown in the webinar; he was explaining things as he went. But he used a basket of tools, some free and some not. He also didn't take the viewers' time to compile an ASTM E2825-12 compliant report. Given the many tools used, I'm not sure how long that takes him to do.

When considering his proposed workflow, you need to consider the total cost of ownership of the whole basket as well as the cost of training on those tools. You also can factor how much time is spent/saved doing common tasks. I've noted before that prior to having FIVE, I could do about 6 cases per day. With FIVE, I could do about 30 per day. Given the amount of work in Los Angeles, this was huge.

For my test, I used one tool - Amped FIVE. I could do everything the presenter was doing in one tool, and more. I could move from the demonstrative to the quantitative - in the same tool.

To be fair, I am now retired from police service and work full time for Amped Software. OK. But the information presented here is reproducible. If you have FIVE and some Walmart video, you can do the same thing in this one amazing tool. Because I come from LE, I am always evaluating tools and workflows in terms of total cost of ownership. Money for training and tech is often hard to come by in government service and one wants the best value for the money spent. By this metric, Amped's tools and training offer the best value.

If you want more information about Amped's tools or training, head over to the web site.
